In one of my posts on type level meta programming in Scala the question of Turing completeness came up already. The question is whether Scala’s type system can be used to force the Scala compiler to carry out any calculation which a Turing machine is capable of. Various of my older posts show how Scala’s type system can be used to encode addition and multiplication on natural numbers and how to encode conditions and bounded loops.

Motivated by the blog post More Scala Typehackery which shows how to encode a version of the Lambda calculus which is limited to abstraction over a single variable in Scala’s type system I set out to further explore the topic.

The SKI combinator calculus

Looking for a calculus which is relatively small, easily encoded in Scala’s type system and known to be Turing complete I came across the

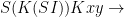

SKI combinator calculus. The SKI combinators are defined as follows:

,

,

,

,

.

.

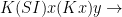

They can be used to encode arbitrary calculations. For example reversal of arguments. Let  . Then

. Then

.

.

Self application is used to find fixed points. Let  for some combinator

for some combinator  . Then

. Then  . That is,

. That is,  is a fixed point of

is a fixed point of  . This can be used to achieve recursion. Let

. This can be used to achieve recursion. Let  be the reversal combinator from above. Further define

be the reversal combinator from above. Further define

for some combinator

for some combinator  and

and

.

.

That is, combinator  is the combinator obtained by applying its argument to the combinator

is the combinator obtained by applying its argument to the combinator  . (There is a bit of cheating here: I should actually show that such combinators exist. However since the SKI calculus is Turing complete, I take this for granted.) Now let

. (There is a bit of cheating here: I should actually show that such combinators exist. However since the SKI calculus is Turing complete, I take this for granted.) Now let  be

be  in

in  from above (That is we have

from above (That is we have  now). Then

now). Then

and by induction

.

.

Type level SKI in Scala

Encoding the SKI combinator calculus in Scala’s type system seems not too difficult at first. It turns out however that some care has to be taken regarding the order of evaluation. To guarantee that for all terms which have a normal form, that normal form is actually found, a lazy evaluation order has to be employed.

Here is a Scala type level encoding of the SKI calculus:

trait Term {

type ap[x <: Term] <: Term

type eval <: Term

}

// The S combinator

trait S extends Term {

type ap[x <: Term] = S1[x]

type eval = S

}

trait S1[x <: Term] extends Term {

type ap[y <: Term] = S2[x, y]

type eval = S1[x]

}

trait S2[x <: Term, y <: Term] extends Term {

type ap[z <: Term] = S3[x, y, z]

type eval = S2[x, y]

}

trait S3[x <: Term, y <: Term, z <: Term] extends Term {

type ap[v <: Term] = eval#ap[v]

type eval = x#ap[z]#ap[y#ap[z]]#eval

}

// The K combinator

trait K extends Term {

type ap[x <: Term] = K1[x]

type eval = K

}

trait K1[x <: Term] extends Term {

type ap[y <: Term] = K2[x, y]

type eval = K1[x]

}

trait K2[x <: Term, y <: Term] extends Term {

type ap[z <: Term] = eval#ap[z]

type eval = x#eval

}

// The I combinator

trait I extends Term {

type ap[x <: Term] = I1[x]

type eval = I

}

trait I1[x <: Term] extends Term {

type ap[y <: Term] = eval#ap[y]

type eval = x#eval

}

Further lets define some constants to act upon. These are used to test whether the calculus actually works.

trait c extends Term {

type ap[x <: Term] = c

type eval = c

}

trait d extends Term {

type ap[x <: Term] = d

type eval = d

}

trait e extends Term {

type ap[x <: Term] = e

type eval = e

}

Eventually the following definition of Equals lets us check types for equality:

case class Equals[A >: B <:B , B]()

Equals[Int, Int] // compiles fine

Equals[String, Int] // won't compile

Now lets see whether we can evaluate some combinators.

// Ic -> c

Equals[I#ap[c]#eval, c]

// Kcd -> c

Equals[K#ap[c]#ap[d]#eval, c]

// KKcde -> d

Equals[K#ap[K]#ap[c]#ap[d]#ap[e]#eval, d]

// SIIIc -> Ic

Equals[S#ap[I]#ap[I]#ap[I]#ap[c]#eval, c]

// SKKc -> Ic

Equals[S#ap[K]#ap[K]#ap[c]#eval, c]

// SIIKc -> KKc

Equals[S#ap[I]#ap[I]#ap[K]#ap[c]#eval, K#ap[K]#ap[c]#eval]

// SIKKc -> K(KK)c

Equals[S#ap[I]#ap[K]#ap[K]#ap[c]#eval, K#ap[K#ap[K]]#ap[c]#eval]

// SIKIc -> KIc

Equals[S#ap[I]#ap[K]#ap[I]#ap[c]#eval, K#ap[I]#ap[c]#eval]

// SKIc -> Ic

Equals[S#ap[K]#ap[I]#ap[c]#eval, c]

// R = S(K(SI))K (reverse)

type R = S#ap[K#ap[S#ap[I]]]#ap[K]

Equals[R#ap[c]#ap[d]#eval, d#ap[c]#eval]

Finally lets check whether we can do recursion using the fixed point operator from above. First lets define  .

.

// b(a) = S(Ka)(SII)

type b[a <: Term] = S#ap[K#ap[a]]#ap[S#ap[I]#ap[I]]

Further lets define some of the  s from above.

s from above.

trait A0 extends Term {

type ap[x <: Term] = c

type eval = A0

}

trait A1 extends Term {

type ap[x <: Term] = x#ap[A0]#eval

type eval = A1

}

trait A2 extends Term {

type ap[x <: Term] = x#ap[A1]#eval

type eval = A2

}

Now we can do iteration on the type level using a fixed point combinator:

// Single iteration

type NN1 = b[R]#ap[b[R]]#ap[A0]

Equals[NN1#eval, c]

// Double iteration

type NN2 = b[R]#ap[b[R]]#ap[A1]

Equals[NN2#eval, c]

// Triple iteration

type NN3 = b[R]#ap[b[R]]#ap[A2]

Equals[NN3#eval, c]

Finally lets check whether we can do ‘unbounded’ iteration.

trait An extends Term {

type ap[x <: Term] = x#ap[An]#eval

type eval = An

}

// Infinite iteration: Smashes scalac's stack

type NNn = b[R]#ap[b[R]]#ap[An]

Equals[NNn#eval, c]

Well, we can 😉

$ scalac SKI.scala

Exception in thread "main" java.lang.StackOverflowError

at scala.tools.nsc.symtab.Types$SubstMap.apply(Types.scala:3165)

at scala.tools.nsc.symtab.Types$SubstMap.apply(Types.scala:3136)

at scala.tools.nsc.symtab.Types$TypeMap.mapOver(Types.scala:2735)